Over the past decade, AI has made remarkable progress in understanding and reasoning over text and pixels. Yet next-generation intelligence—spatial intelligence, embodied intelligence, physical reasoning, the ability to interact with the world—demands something fundamentally different.

It demands Xperience.

Today's models still lack the right data to learn how humans perceive, move, manipulate, and interact within complex physical environments. Existing datasets help, but they are not enough: not large enough, not rich enough, and often not grounded in real human behavior. In many cases, we are still unsure what kind of data intelligence truly needs.

At Ropedia, we believe the answer lies in a simple but powerful idea:

Human-level intelligence emerges from large-scale records of real human Xperience.

Human Xperience: Beyond Perception

We use Xperience deliberately. The X stands for the many dimensions of human experience: what people see, hear, and touch, how they move, and what they interact with as they live and act in the real world.

Human Xperience is not just perception. It is perception tightly coupled with action, intention, and physical consequence.

To capture it faithfully, we must start from the perspective where experience originates.

Xperience from the Egocentric Perspective

If we want AI systems that understand and operate in the physical world, we must teach them from the viewpoint that best reflects real human behavior: the egocentric perspective.

From the egocentric perspective, human experience naturally includes:

- Rich human-object interactions

- Spatial context and scene affordances

- Social signals from other people

- Fine-grained motor actions unfolding over time

This is exactly the information an intelligent system needs to learn how to move, manipulate, and reason about the physical world.

And just as importantly:

Egocentric capture scales.

A body-mounted system, without external infrastructure or environmental instrumentation, enables truly in-the-wild data collection across homes, workplaces, factories, hospitals, and beyond. This scalability unlocks diversity, realism, and long-tail behaviors that are critical for robust generalization.

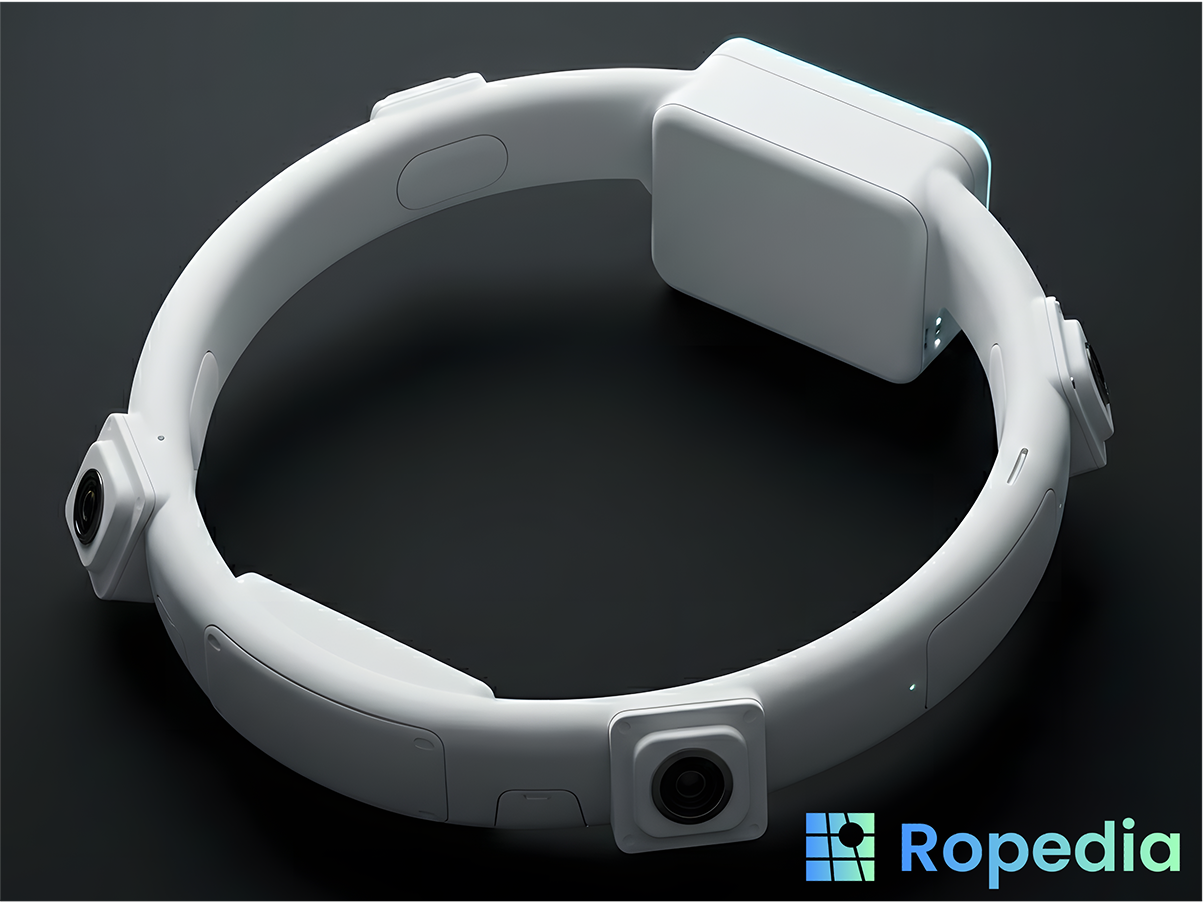

HOMIE: Human-centric OMni Interaction & Experience Capture

To make large-scale human Xperience capture possible, we built HOMIE, a full-stack hardware-software platform designed for frictionless, lossless human-centric capture.

Hardware: Head-Mounted, Multi-Modal Xperience Capture

HOMIE's hardware is engineered for accurate spatial understanding and long-term real-world use.

- Lightweight, ergonomic head-mounted form factor

- Multi-modal sensing for rich physical and spatial signals

- Robust ego-motion tracking and localization

- Long battery life for all-day capture

- Precise sensor synchronization for high-fidelity reconstruction

Our goal is simple:

Make capturing human Xperience as natural as wearing glasses.

Software: Spatial Foundation Models for Automatic Annotation

Raw experience alone is not enough. Intelligence requires structure.

HOMIE includes a suite of spatial foundation models that automatically transform captured Xperience into machine-readable annotations, including:

- Spatial localization and stereo depth

- Hand-object interaction tracking

- Full-body motion capture

- Panoramic scene perception

- …and more to come

Together, these models convert unstructured human experience into structured datasets for interactive intelligence, ready for training the next generation of intelligent systems.
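To make "machine-readable" concrete, here is a minimal sketch of what one annotated frame could look like if the annotation types listed above were stored together. This is purely illustrative: the class names, field names, and units are our assumptions for the example, not HOMIE's actual data format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]


@dataclass
class HandObjectContact:
    """Hypothetical record of one hand-object interaction in a frame."""
    hand: str            # "left" or "right"
    object_label: str    # free-form label, e.g. "mug"
    in_contact: bool


@dataclass
class AnnotatedFrame:
    """Hypothetical per-frame annotation; all field names are assumptions."""
    timestamp_s: float
    head_position_m: Vec3          # from spatial localization
    head_orientation: Quat         # unit quaternion (w, x, y, z)
    depth_map_path: str            # stereo depth stored as a file on disk
    body_joints_m: Dict[str, Vec3] = field(default_factory=dict)
    contacts: List[HandObjectContact] = field(default_factory=list)


def frames_touching(frames: List[AnnotatedFrame], label: str) -> List[AnnotatedFrame]:
    """Return the frames in which either hand is in contact with `label`."""
    return [
        f for f in frames
        if any(c.in_contact and c.object_label == label for c in f.contacts)
    ]
```

With records like these, a query such as "every moment the wearer is holding a mug" becomes a simple filter rather than a manual labeling task.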

ReXperience: How AI Learns from Human Xperience

Intelligence does not emerge from passive observation alone. It emerges through ReXperience: the process by which AI systems repeatedly relive, model, and internalize human Xperience.

We see immediate impact from ReXperience in three frontier directions:

1. World Models: Learning Predictive Intelligence from Human Xperience

Egocentric human Xperience provides realistic trajectories for learning how environments evolve and how actions lead to consequences. This grounds world models in real perception and interaction, improving physical prediction and reasoning. At scale, it enables world models that generalize across diverse, unstructured real-world scenarios.
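As a rough illustration of how a recorded trajectory becomes world-model training data, the sketch below slices a sequence of observations and actions into (observation, action, next observation) tuples, the usual supervision for a next-state predictor. The `Transition` type and `to_transitions` helper are hypothetical names for this example, and the observations are plain number lists standing in for real sensor features.

```python
from typing import List, NamedTuple, Sequence


class Transition(NamedTuple):
    """One training example: what was seen, what was done, what came next."""
    observation: Sequence[float]
    action: Sequence[float]
    next_observation: Sequence[float]


def to_transitions(
    observations: Sequence[Sequence[float]],
    actions: Sequence[Sequence[float]],
) -> List[Transition]:
    """Pair each action with the observations immediately before and after it.

    Expects one more observation than actions: o_0, a_0, o_1, a_1, ..., o_T.
    """
    if len(observations) != len(actions) + 1:
        raise ValueError("need exactly one more observation than actions")
    return [
        Transition(observations[t], actions[t], observations[t + 1])
        for t in range(len(actions))
    ]
```

A world model trained on such tuples learns to predict `next_observation` from `observation` and `action`, which is where realistic human trajectories matter most.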

2. Real2Sim: Building Better Simulations from Human-Captured Reality

Simulation remains essential for robot learning, but its fidelity is fundamentally limited by the realism of its assets. Human Xperience supplies natural motion patterns, contact dynamics, and task distributions that simulations often miss. By grounding simulation in real human behavior, Real2Sim produces training environments that are more representative, diverse, and effective.

3. VLA Models: Scaling Generalization through Egocentric Action Grounding

Large-scale egocentric Xperience exposes vision-language-action (VLA) models to how humans actually perceive, act, and describe tasks in real environments. This grounding leads to much stronger generalization across new tasks, instructions, and scenes. As Xperience scales, so does the model's ability to adapt and act intelligently in the open world.

Our Mission

If we want AI that moves like us, understands like us, and helps us in everyday physical tasks, then its intelligence must be built from human experience itself. At Ropedia, we are building systems that capture, structure, and transform human Xperience into the foundation for machine intelligence.

Intelligence begins with Xperience. We're here to scale it.

If you are building world models, robotics systems, or embodied agents, and care deeply about learning from real human experience, we'd love to talk.

Reach out to us at contact@ropedia.com or follow our updates at @ropedia_ai.

Be the first to get updates and early access to our device and data.